Building a Retail Analytics Conversational UX with Databricks Genie

Retail success depends on precision and speed across the supply chain, yet many suppliers still operate with limited visibility into daily, store-level sales and inventory data. Databricks Genie changes that. As an AI assistant built directly into the Databricks Lakehouse, Genie allows COOs and business leaders to ask natural-language questions and receive instant, accurate answers grounded in governed data from Unity Catalog.

What is Databricks Genie?

Databricks Genie is an AI assistant built into the Databricks Lakehouse that lets business leaders and analysts ask natural-language questions about their company’s data — and get instant, trustworthy answers. It draws directly from governed data and metrics in Unity Catalog, so insights are accurate, secure, and consistent across teams. For a COO, Genie turns the Lakehouse into an on-demand operations analyst — surfacing KPIs like OTIF, stockout exposure, or fulfillment efficiency in seconds, without waiting on data teams.

How is Databricks Genie Useful in Retail?

When used in a retail organization, Genie acts as a conversational user interface (CUI) that connects across OMS, WMS, TMS, and ERP systems.

Genie transforms the Lakehouse into a real-time operations cockpit—surfacing metrics like OTIF or stockout exposure on demand and enabling retail executives to move from question to action with speed, confidence, and consistency.

In this article I use Genie to build a conversational analytics interface on top of our Databricks retail medallion architecture for logistics.

Retail and Conversational User Interfaces

Conversational user interfaces (CUIs) built on top of a data lakehouse give retail COOs a direct, intelligent pathway from question to action—without the delays of traditional dashboards or manual report requests. By connecting seamlessly across OMS, WMS, TMS, ERP, and analytics layers, a CUI allows executives to ask complex operational questions in natural language, receive data-driven answers with full lineage and source citations, and, when appropriate, trigger workflows or corrective actions instantly. This turns the lakehouse into a real-time decision cockpit—streamlining daily operations, improving responsiveness to disruptions, and empowering leadership to move from insight to execution with unprecedented speed and confidence.

Databricks Genie spaces are a foundational building block on the Databricks platform for building conversational user interfaces.

Data Lakehouse Discipline

We use the data lakehouse foundation we built previously in this series. It includes a metric view for OTIF (On Time, In Full) within Databricks, which standardizes how delivery performance is calculated and reported. This ensures that when executives, analysts, or suppliers ask about OTIF performance, they are referencing a single, consistent definition across the organization—eliminating confusion and enabling faster, more aligned decisions.
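To make the single-definition idea concrete, here is a minimal Python sketch of what an OTIF calculation boils down to. It is not the metric view from this series; the `Delivery` fields and the sample rows are hypothetical and exist only to illustrate the rule that a delivery counts when it is both on time and in full.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Delivery:
    """One delivered order; all field names here are hypothetical."""
    promised_date: date
    delivered_date: date
    ordered_qty: int
    delivered_qty: int


def is_otif(d: Delivery) -> bool:
    """A delivery counts only if it is both on time and in full."""
    on_time = d.delivered_date <= d.promised_date
    in_full = d.delivered_qty >= d.ordered_qty
    return on_time and in_full


def otif_rate(deliveries: list[Delivery]) -> float:
    """Share of deliveries that were on time and in full (0.0 when there is no data)."""
    if not deliveries:
        return 0.0
    return sum(is_otif(d) for d in deliveries) / len(deliveries)


# Made-up sample data for illustration only.
sample = [
    Delivery(date(2024, 1, 10), date(2024, 1, 9), 100, 100),   # on time, in full
    Delivery(date(2024, 1, 10), date(2024, 1, 12), 100, 100),  # late
    Delivery(date(2024, 1, 10), date(2024, 1, 10), 100, 80),   # short shipment
    Delivery(date(2024, 1, 10), date(2024, 1, 10), 50, 50),    # on time, in full
]
print(otif_rate(sample))  # 0.5
```

Whatever the exact rule is in your organization, the point of the metric view is that the rule is written down once, so every question about OTIF resolves to the same calculation.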

To make this work, we deliberately map only the relevant datasets and metrics into the conversational layer rather than exposing the entire data estate. This focused approach allows the underlying language models (LLMs) to operate within well-defined boundaries, delivering more accurate and reliable answers. Databricks Genie strengthens this by linking natural language to metric views and query patterns, ensuring every request for a key KPI like OTIF uses the same trusted formula. The result is a consistent, intelligent data experience that drives operational precision across retail logistics.

Building a Genie Space for Retail Logistics KPI Analysis

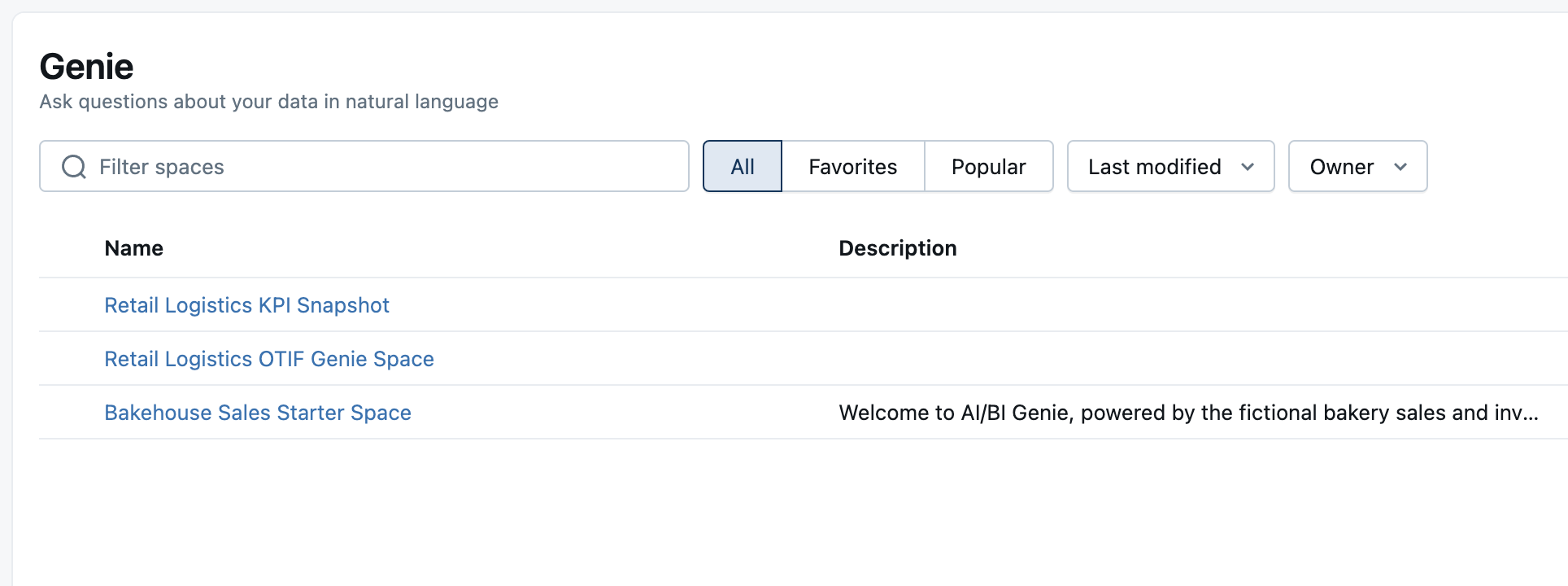

If you click the "Genie" button on the left-hand toolbar in your main Databricks platform dashboard, you should see a screen similar to the image below.

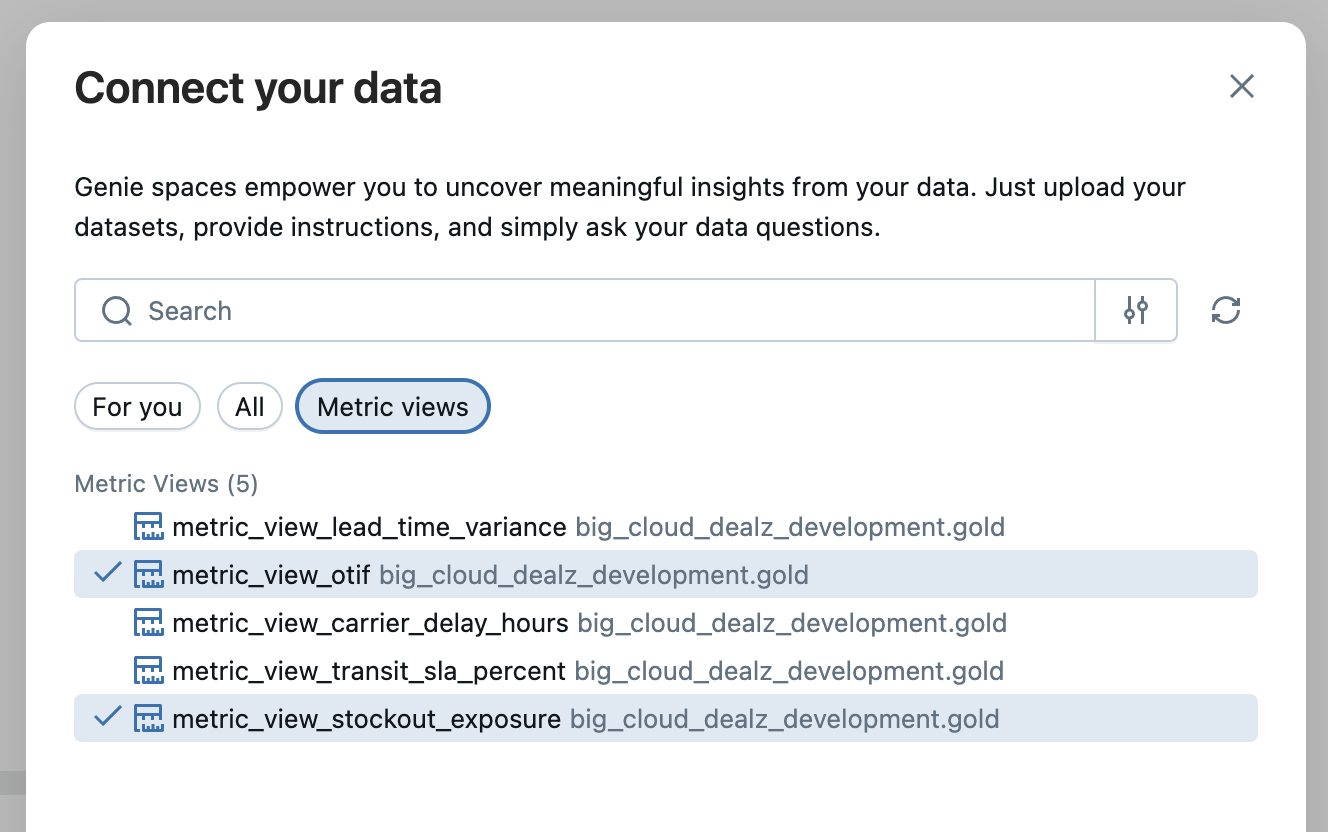

Click the "New" button in the upper right-hand corner of the Genie dashboard and you will be presented with a "Connect your data" modal popup as seen below.

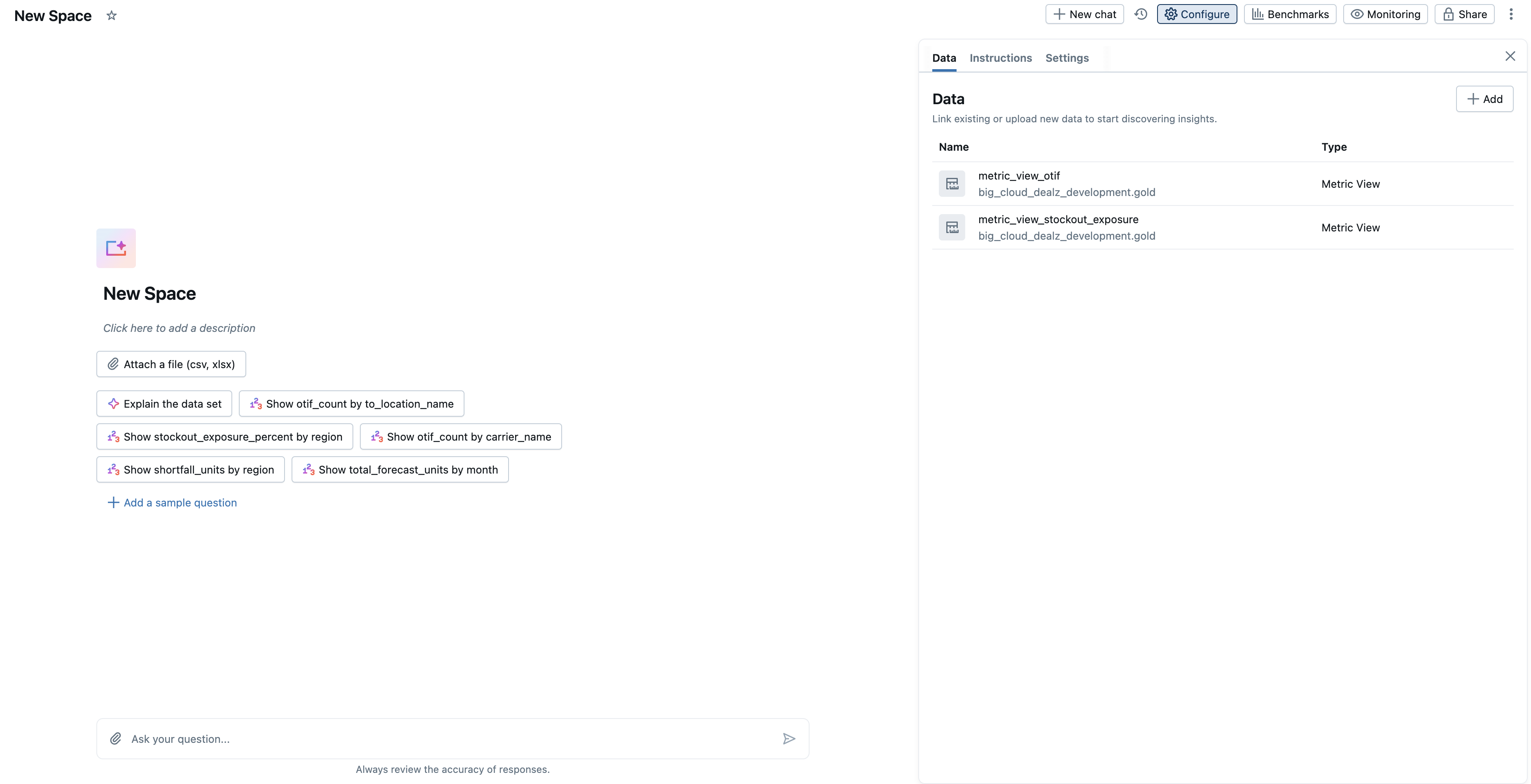

Since we've already done the hard work in modeling our data in a semantic view with metric views, click the Metric Views filter and select metric_view_otif and metric_view_stockout_exposure from the list and click next. You should see a Genie new space dashboard as seen in the image below.

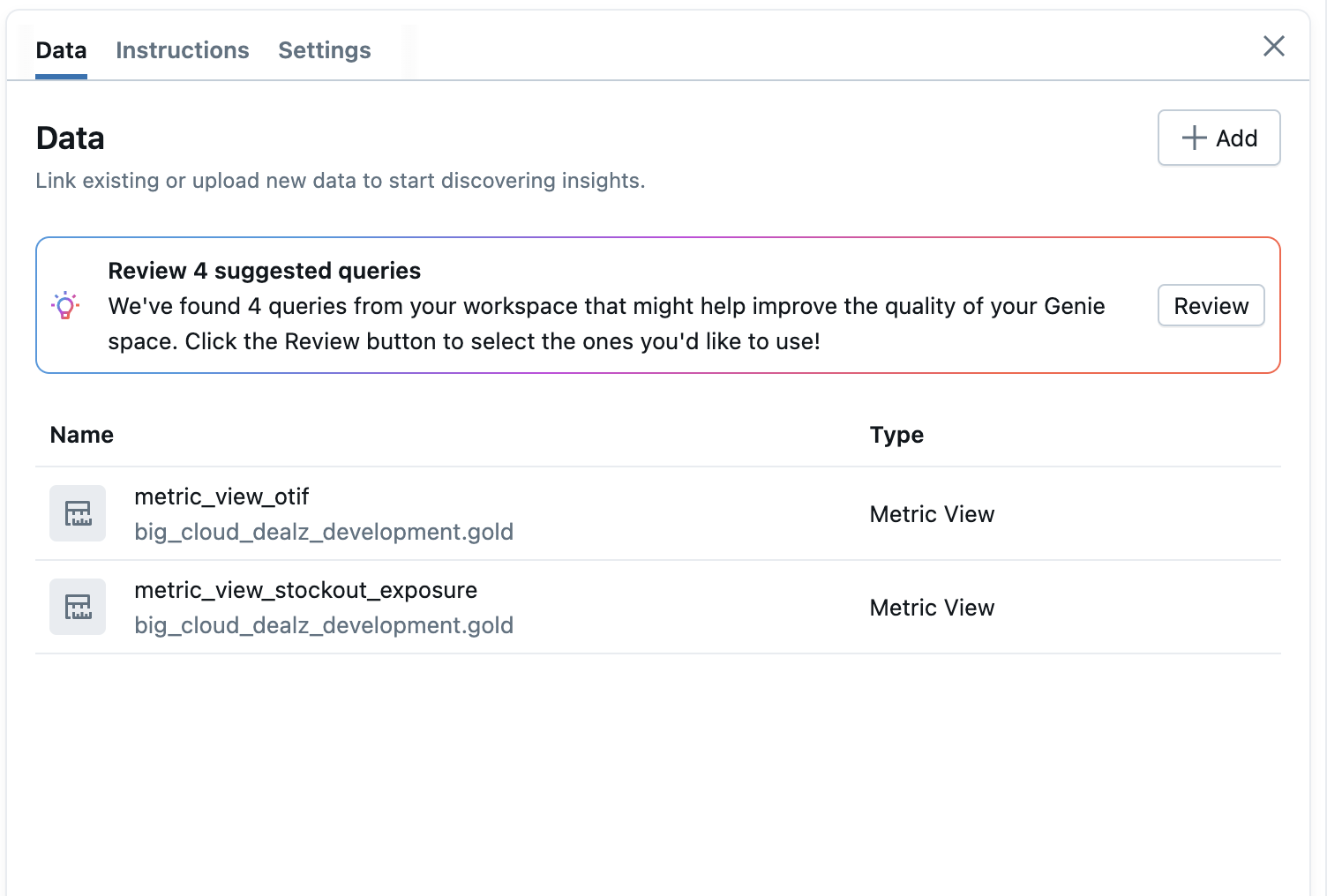

Before we move on, let's quickly check out the configuration panel on the right side as seen in the image below.

This is where we can continue to refine the configuration of our Genie space as we test responses in the chat playground. We can also add specific text instructions for how the LLM should think about writing queries for our metric views. Questions are often phrased in ways that require the LLM to reason about how best to tailor the SQL query, and this is where the power of metric views shines, because they are useful for calculating multiple variations of similar answers.
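As a rough illustration of those variations, the sketch below builds two queries against the same metric view. It assumes metric view measures are read with the `MEASURE()` aggregate in Databricks SQL; the measure name `otif_rate` and the dimensions `ship_week` and `carrier` are hypothetical, so substitute the fields your own metric view defines.

```python
def metric_view_query(view: str, measures: list[str], dimensions: list[str]) -> str:
    """Build a SELECT that reads measures from a metric view, grouped by dimensions."""
    select_items = dimensions + [f"MEASURE({m}) AS {m}" for m in measures]
    sql = f"SELECT {', '.join(select_items)}\nFROM {view}"
    if dimensions:
        sql += f"\nGROUP BY {', '.join(dimensions)}"
    return sql


# Same measure, two different slices: the formula itself never changes.
# "otif_rate", "ship_week", and "carrier" are hypothetical field names.
by_week = metric_view_query("metric_view_otif", ["otif_rate"], ["ship_week"])
by_carrier = metric_view_query("metric_view_otif", ["otif_rate"], ["carrier"])
print(by_week)
print(by_carrier)
```

Both queries lean on the same measure definition and differ only in how the result is grouped, which is the kind of variation the LLM has to produce when the same KPI is asked about in different ways.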

It's Always About Focus

One great trick for reducing unintended answers (e.g., "hallucinations") is to constrain the options and variations the LLM has to consider when reasoning over your question and your contextual data.

Databricks is already doing some of this for you by providing you with constructs (e.g., "Genie") to use software to constrain an LLM to a specific task with specific data.

So if you use a well-modeled data lakehouse with metric views, you already have consistency in your formulas. Genie spaces then map a set of queries to a set of metric views, so when the LLM is finally routed a specific type of question, the system is able to format the prompt in a tight, focused, and efficient way. This raises the consistency of the answers generated in AI / BI applications, which better supports end users.

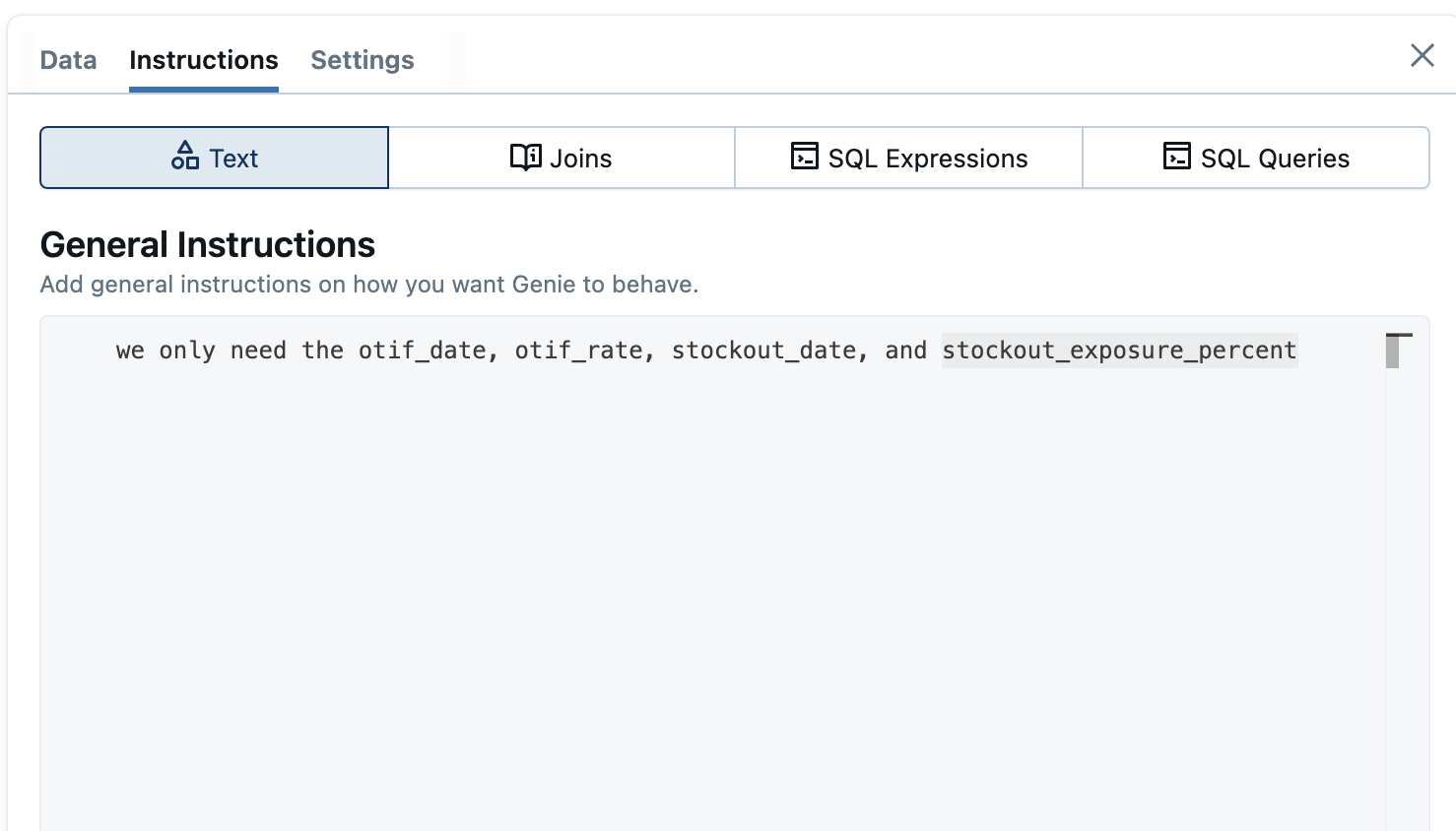

To add specific text instructions you can click on the instructions tab and write out how you want the system to focus on generating answers, as you can see below.

Basically, we're reminding the system to focus only on these specific fields when asked about OTIF and stockout exposure. The nice thing about building Genie spaces is that, because we've done so much work on the Unity Catalog and metric view side of organizing the data, building LLM-based conversational user interfaces for our data lakehouse is not that hard with Databricks Genie.

"The dirty secret of AI is that the hardest part is the data."

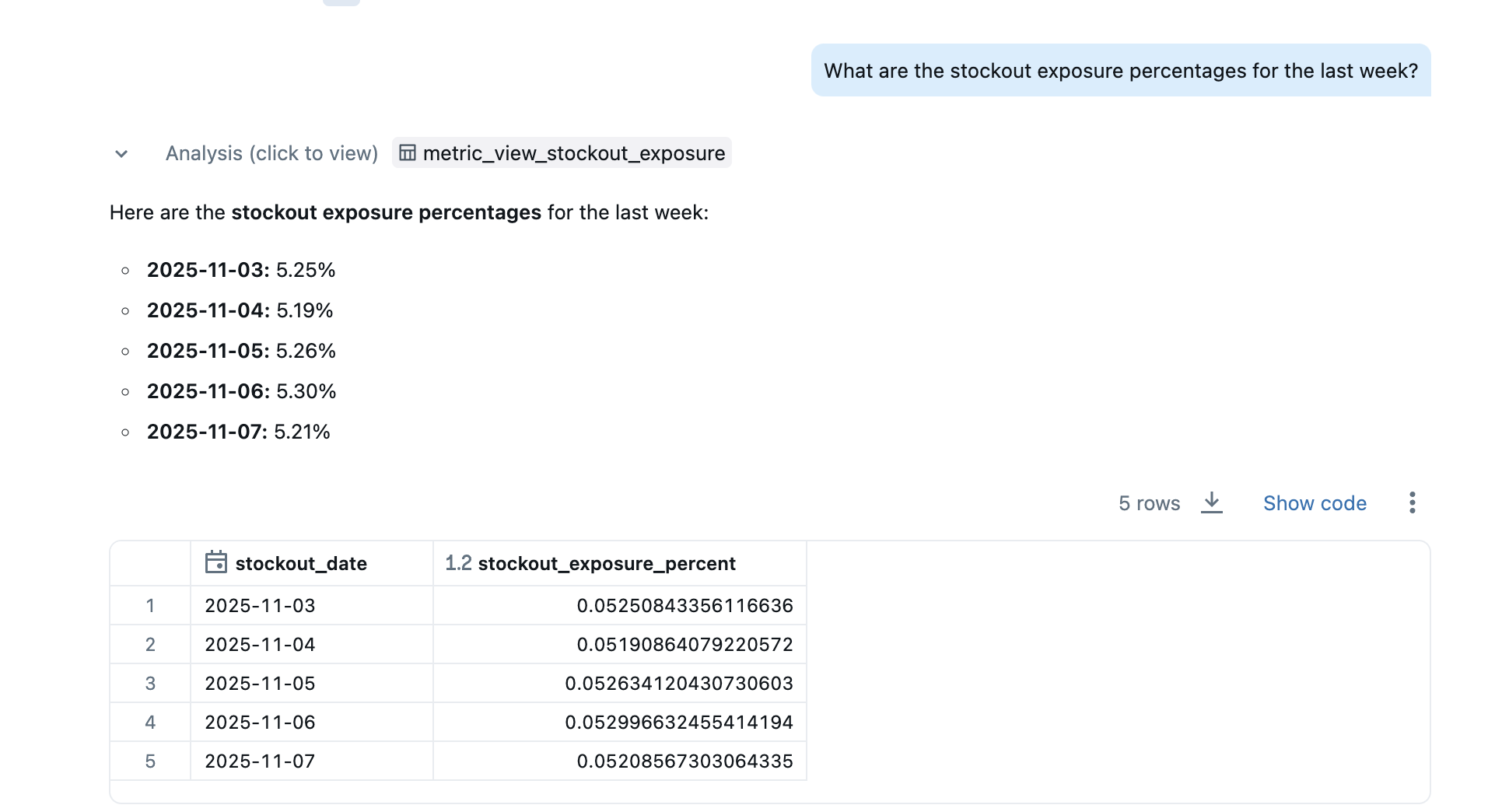

Ok, enough exposition. Let's do a quick test with the query "What is the stockout exposure for the last week?"

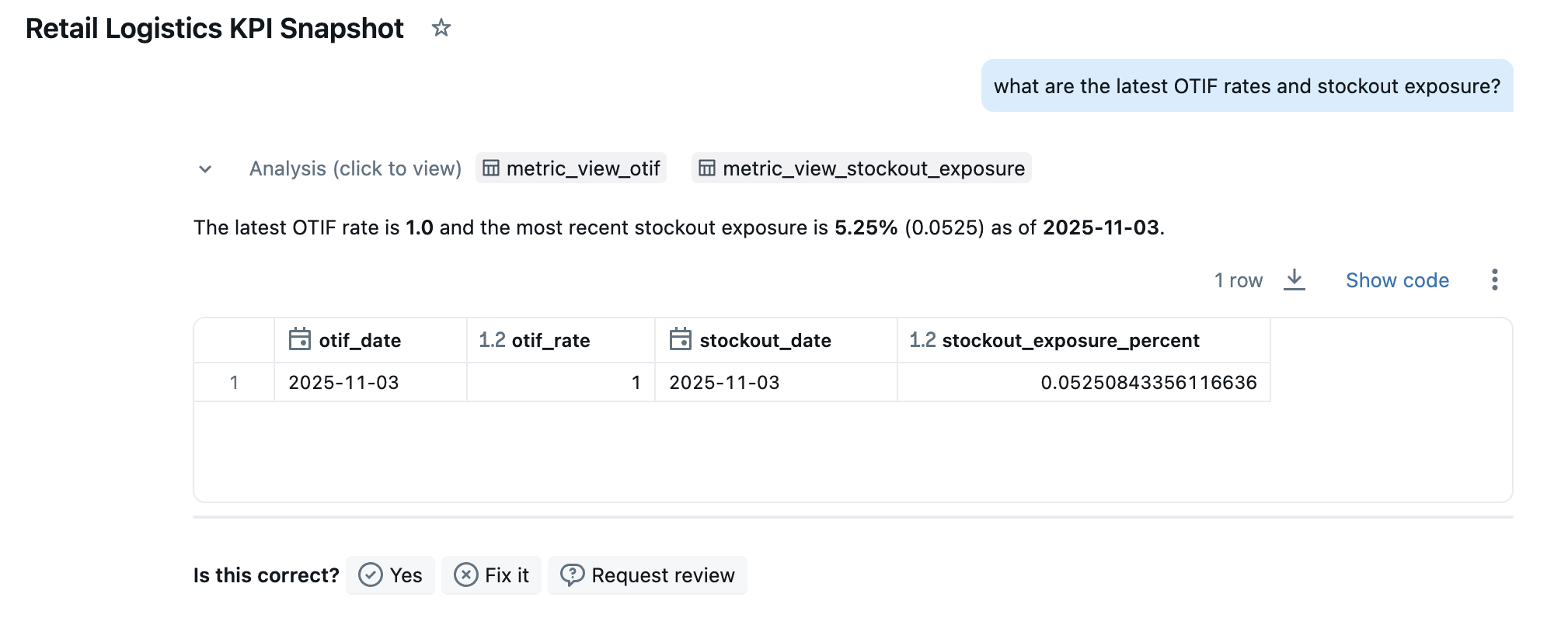

Now, thinking ahead to where we want to go in the next article, let's try the query "What are the latest OTIF rates and stockout exposure?"

And that's all there is to it. We did all the hard work by getting our metric views put together, and the text metadata mapping in the Genie configuration does the rest with LLMs.
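The same question can in principle be sent to a Genie space from code rather than typed into the chat box. The sketch below only builds the HTTP request and does not send it. It assumes the Genie Conversation API exposes a `start-conversation` endpoint under `/api/2.0/genie/spaces/{space_id}` that accepts a JSON body with a `content` field; the workspace URL, space ID, and token are placeholders, and the helper name `build_genie_question` is my own.

```python
import json
import urllib.request


def build_genie_question(host: str, space_id: str, token: str, question: str) -> urllib.request.Request:
    """Build (but do not send) a request that starts a Genie conversation.

    The endpoint path and the "content" body field are assumptions about the
    Genie Conversation API; check the current API reference before relying on them.
    """
    url = f"{host.rstrip('/')}/api/2.0/genie/spaces/{space_id}/start-conversation"
    body = json.dumps({"content": question}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


request = build_genie_question(
    host="https://example.cloud.databricks.com",  # placeholder workspace URL
    space_id="my-space-id",                        # placeholder Genie space ID
    token="placeholder-token",                     # placeholder access token
    question="What are the latest OTIF rates and stockout exposure?",
)
print(request.get_method(), request.full_url)
# Sending is left out on purpose: urllib.request.urlopen(request) needs real credentials.
```

Building the request separately from sending it keeps the example runnable without a workspace and makes the payload easy to inspect before any credentials are involved.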

Summary and Next Steps

Databricks Genie spaces make it easy to build quick, accurate, and consistent conversational user interfaces for your data lakehouse tables, views, and metric views. However, they're mostly meant for use by analysts and business leaders.

What if we want to take the next step and automate the analysis of multiple KPIs at once to accelerate decision making with custom business rules? For more on that, check out the next article on "Accelerating Retail Decision Intelligence with Agent Bricks".

Next in Series

Deploying RAG Retail Knowledge Assistant for Product Sales Support with Agent Bricks

This article demonstrates how to build and deploy a Databricks Agent Bricks knowledge assistant that uses Retrieval Augmented Generation (RAG) to answer complex, real-time product questions from plain text documents, enabling faster, more confident retail customer interactions.
